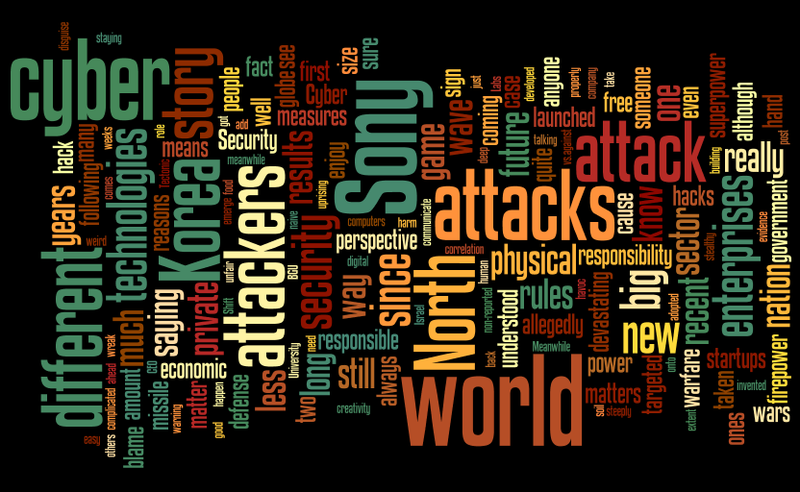

United We Stand, Divided We Fall.

If I had to single out one development that elevated the sophistication of cybercrime by an order of magnitude, it would be sharing: code sharing, vulnerability sharing, knowledge sharing, sharing of stolen passwords, and anything else one can think of. Attackers who once worked in silos, in essence competing with one another, have discovered and fully embraced the power of cooperation and collaboration. I was honored to present a high-level overview on the topic of cyber collaboration a couple of weeks…