Chatbots are everywhere. It feels like the early days of mobile apps, when you either knew someone who was building an app or knew many others who were planning to. Chatbots have their magic: a frictionless interface that lets you chat naturally, as you would with a person. The main difference is that on the other side there is a machine and not a person. Still, someone as old as I am has to wonder whether this is the end game of human-machine interaction or just another evolutionary step on a long path.

How Did We Get Here?

I've been watching chatbots for quite a while, and they piqued my curiosity about both the possible use cases and the underlying architecture. What interests me even more is the ambitions that Facebook and the other AI superpowers have for them. And chatbots are indeed the next step in human-machine communication.

We all know where the history began: we had to communicate via a command-line interface limited by a very strict vocabulary of commands, an interface reserved for computer geeks alone. The next evolutionary step was the big wave of graphical user interfaces. The early ones were ugly, but they improved in significant leaps, making the user experience as smooth as possible while still bounded by the options and actions available in a specific context in a particular application. Alongside graphical user interfaces, we were introduced to search-like interfaces, which mix graphical user interface elements with a command-line input that allows extensive textual interaction; here the GUI serves primarily as a navigation tool. Then other new human-machine interfaces were introduced, each evolving on its own track: the voice interface, the gesture interface (usually hands), and the VR interface. Each of these interaction paradigms uses different human senses and body parts to communicate with the machine, and the machine can understand you to a certain extent and communicate back.

And now we have chatbots, and there's something different about them. In a way, it's the first time you can express yourself freely via texting and the machine will understand your intentions and desires. That's the premise. It does not mean each chatbot can respond to every request, as chatbots are confined to the logic that was programmed into them, but from a language-barrier point of view, a new peak has been reached.

So, are we now experiencing the end of the road for human-machine interactions? Last week I met an extraordinary woman named Zohar Urian (the lucky Hebrew readers can enjoy her super-smart blog about creativity, innovation, marketing, and lots of other cool stuff), and she said that voice would be next, which makes a lot of sense. Voice has less friction than typing, its popularity in messaging is only growing, and the technology is almost at the point of allowing free vocal expression that a machine can understand. Zohar's sentence echoed in my brain and made me go deeper into understanding the anatomy of the evolution of the human-machine interface.

The Evolution of Human-Machine Interfaces

The progress in human-to-machine interaction follows evolutionary patterns. Every new paradigm builds on capabilities from the previous one, and eventually survival of the fittest plays a significant role: the winning capabilities survive and evolve. It is in its nature to grow this way, since the human factor is the dominant one in this evolution. Every change in this evolution can be decomposed into four dominant factors:

- The brain, or the intelligence within the machine: it contains the logic available to the human, but also the capabilities that define the semantics and boundaries of communication.

- The communication protocol provided by the machine, such as the ability to decipher audio into words and sentences, which enables voice interaction.

- The way the human communicates with the machine, which is tightly coupled with the machine's communication protocol but plays the complementary role.

- The human brain.

The holy 4 factors

Machine Brain <->

Machine Protocol <->

Human Protocol <->

Human Brain

In each paradigm shift, there was a change in one or more factors.

Paradigms

Command Line 1st Generation

The first interface, used to send restricted commands to the computer by typing them on a textual screen

Machine Brain: Dumb and restricted to a set of commands and selection of options per system state

Machine Protocol: Textual

Human Protocol: Fingers typing

Human Brain: Smart

Graphical User Interfaces

A 2D interface controlled by a mouse and a keyboard allowing text input, selection of actions and options

Machine Brain: Dumb and restricted to a set of commands and selection of options per system state

Machine Protocol: 2D positioning and textual

Human Protocol: 2D hand movement and finger actions, as well as fingers typing

Human Brain: Smart

Adaptive Graphical User Interfaces

Same as the previous one, though here the GUI is more flexible in its possible inputs, thanks in part to situational awareness of the human context (location…)

Machine Brain: Getting smarter and able to offer a different set of options based on profiling of the user's characteristics. Still limited to a set of options and to 2D positioning and textual inputs.

Machine Protocol: 2D positioning and textual

Human Protocol: 2D hand movement and finger actions, as well as fingers typing

Human Brain: Smart

Voice Interface 1st Generation

The ability to identify content represented as audio and to translate it into commands and input

Machine Brain: Dumb and restricted to a set of commands and selection of options per system state

Machine Protocol: Listening to audio and content matching within the audio track

Human Protocol: Restricted set of voice commands

Human Brain: Smart

Gesture Interface

The ability to identify physical movements and translate them into commands and selection of options

Machine Brain: Dumb and restricted to a set of commands and selection of options per system state

Machine Protocol: Visual reception and content matching within the video track

Human Protocol: Physical movement of specific body parts in a certain manner

Human Brain: Smart

Virtual Reality

A 3D interface with the ability to identify a full range of body gestures and transfer them into commands

Machine Brain: A bit smarter but still restricted to selection from a set of options per system state

Machine Protocol: Movement reception via sensors attached to the body and projection of peripheral video

Human Protocol: Physical movement of specific body parts in a free form

Human Brain: Smart

AI Chatbots

A natural language detection capability which can identify within the supplied text the rules of human language and transfer them into commands and input

Machine Brain: Smarter and flexible thanks to AI capabilities but still restricted to the selection of options and capabilities within a certain domain

Machine Protocol: Textual

Human Protocol: Fingers typing in a free form

Human Brain: Smart

Voice Interface 2nd Generation

Same as the previous one but with a combination of voice interface and natural language processing

Machine Brain: Same as the previous one

Machine Protocol: Identification of language patterns and constructs from audio content and translation into text

Human Protocol: Free speech

Human Brain: Smart

What's next?
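Since every paradigm above is described with the same four factors, the comparison between any two of them can be sketched in a few lines of Python. This is only an illustration of the framework, not a formal model: the `Paradigm` class, the `changed_factors` helper, and the shortened field values are my own hypothetical names, paraphrased from the entries above.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class Paradigm:
    """One interface paradigm described by the four factors (hypothetical model)."""
    name: str
    machine_brain: str
    machine_protocol: str
    human_protocol: str
    human_brain: str = "smart"  # the one factor that stays the same in every entry


def changed_factors(old: Paradigm, new: Paradigm) -> list[str]:
    """Return the names of the factors whose values differ between two paradigms."""
    return [
        f.name
        for f in fields(Paradigm)
        if f.name != "name" and getattr(old, f.name) != getattr(new, f.name)
    ]


# Values are shortened paraphrases of the entries above.
command_line = Paradigm(
    "Command Line 1st Generation",
    machine_brain="restricted command set",
    machine_protocol="textual",
    human_protocol="fingers typing",
)
chatbot = Paradigm(
    "AI Chatbots",
    machine_brain="AI within a domain",
    machine_protocol="textual",
    human_protocol="fingers typing in free form",
)
voice_2 = Paradigm(
    "Voice Interface 2nd Generation",
    machine_brain="AI within a domain",
    machine_protocol="audio to text",
    human_protocol="free speech",
)

print(changed_factors(command_line, chatbot))  # ['machine_brain', 'human_protocol']
print(changed_factors(chatbot, voice_2))       # ['machine_protocol', 'human_protocol']
```

Comparing the command line with chatbots shows the machine protocol unchanged (still textual) while the machine brain differs, and comparing chatbots with second-generation voice shows the opposite: the brain is the same and only the protocols move.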

Observations

There are several phenomena and observations from this semi-structured analysis:

- Combining communication protocols, such as voice and VR, will extend the range of communication between humans and machines even without changing anything in the computer brain.

- Over time, more and more human senses and physical interactions become available for computers to understand, which extends the boundaries of communication. Up until today, neither smell nor touch has gone mainstream. I am pretty sure we will see them in the near future.

- The human brain always stays the same, and the rest of the chain always strives to match its capabilities. The chain can be viewed as a funnel that limits the human brain from fully expressing itself digitally, and over time it gets wider.

- An interesting question is whether at some point the human brain will get stronger, once communication with machines has no boundaries and AI is stronger.

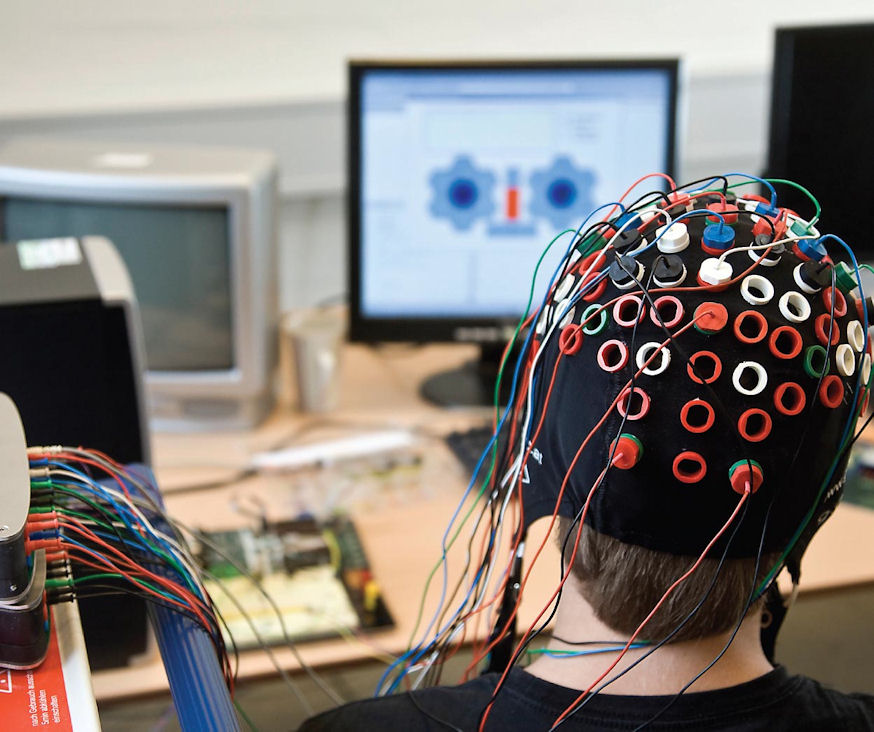

- We have not yet witnessed any serious leap that removed one of the elements in the chain, which is what I would call a revolutionary step; so far everything has behaved in an evolutionary manner. Maybe the identification of brain waves and their real-time translation into a protocol a machine can understand will be such a step, removing the need to translate thoughts into some intermediate medium.

- As the machine brain becomes smarter with each evolutionary step, the magnitude of expression grows, so there is progress even without creating a more expressive communication protocol.

- From a communications point of view, chatbots are in a way a jump back to the initial protocol of the command line, though the smartness of today's machine brains makes it a different thing. So it is really about the progress of AI and not about chatbots.

I may have missed some interfaces; apologies, I am not an expert in that area :)

Now to The Answer

So, the answer to the main question: chatbots indeed represent a big step in streamlining natural language processing for identifying user intentions in writing. Combined with the fact that users' favorite method of communication nowadays is texting, that makes for powerful progress. Still, the main thrill here is the development of AI, and that carries across all communication protocols. So, in simple words, chatbots are just an addition to the arsenal of communication protocols between humans and machines, and we are far from seeing the end of this evolution. From the FB and Google point of view, these are new interfaces to their AI capabilities, which grow stronger every day thanks to increased usage.

Food for Thought

If one conscious AI meets another conscious AI in cyberspace, will they communicate via text or voice or something else?