Imagine a futuristic security technology that can stop any exploit at the exact moment of exploitation, regardless of how the exploit was built, what evasion techniques it uses, or any mutation it has or might conceivably have. This technology is truly agnostic to the form of attack: the attack is prevented, and the attacker is caught red-handed at the exact moment of the exploit… Sounds dreamy, no? For the guys at the stealth startup Morphisec, it's a daily reality. So I decided to convince the team in the malware analysis lab to share some of their findings from today, and I have to brag about it a bit :)

Exploit Analysis

The guys at Morphisec love samples like these because they let them test their product against what is considered a zero-day, or at least an unknown attack. Within an hour, they had already identified the CVE exploited by the attack and the method of exploitation.

Technical Analysis

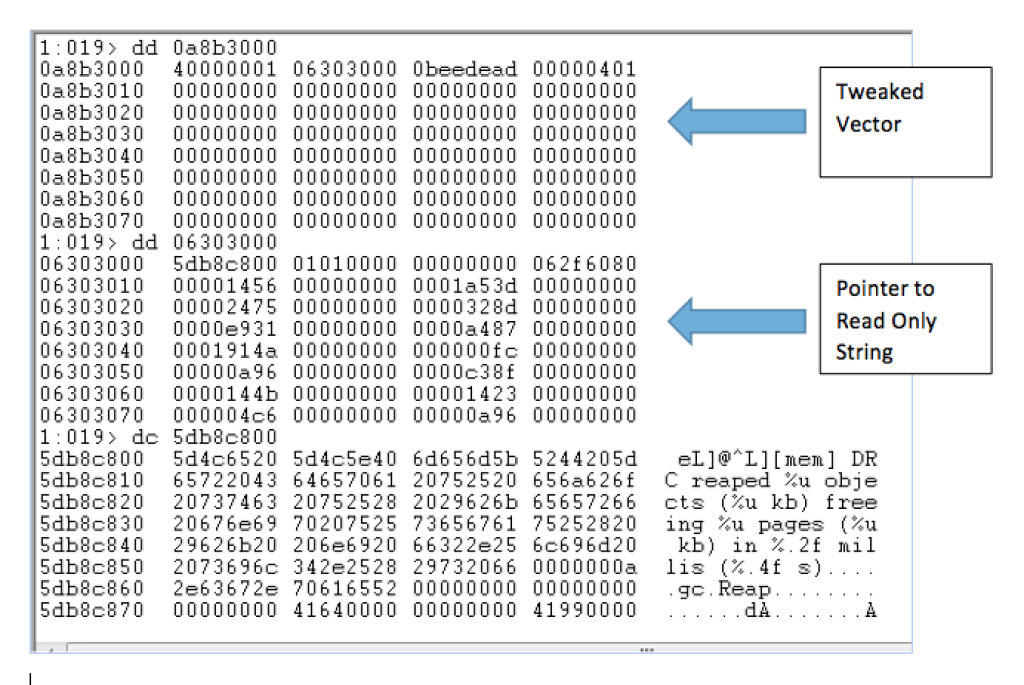

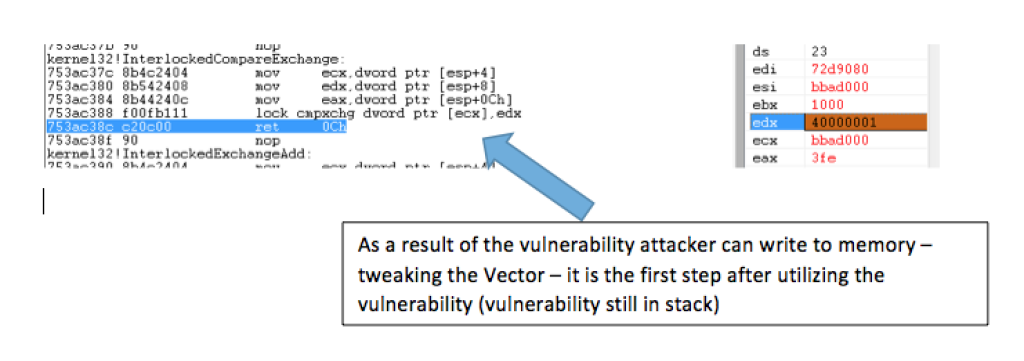

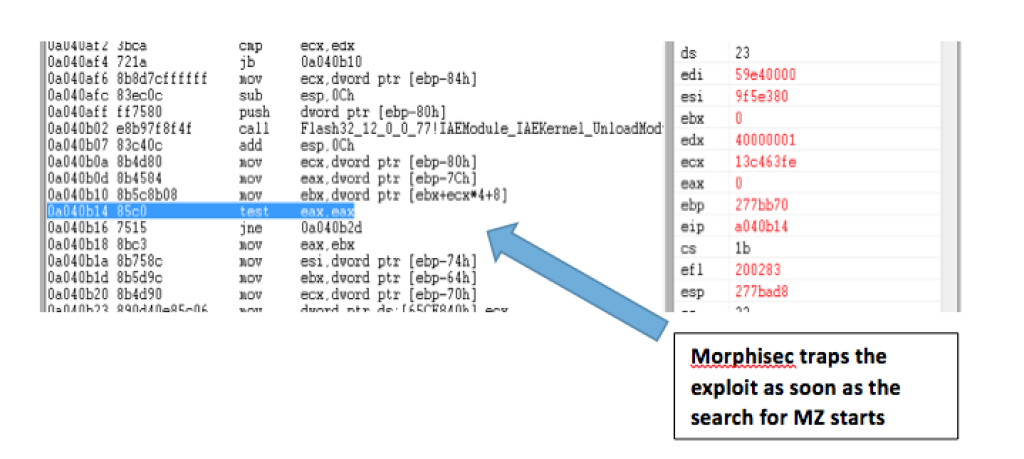

Morphisec prevents the attack at the point where it starts looking for the Flash module's address (which would later be used to find gadgets). The vulnerability allows the attacker to modify the size of a single array out of many sequentially allocated arrays, each of size 0x3fe.

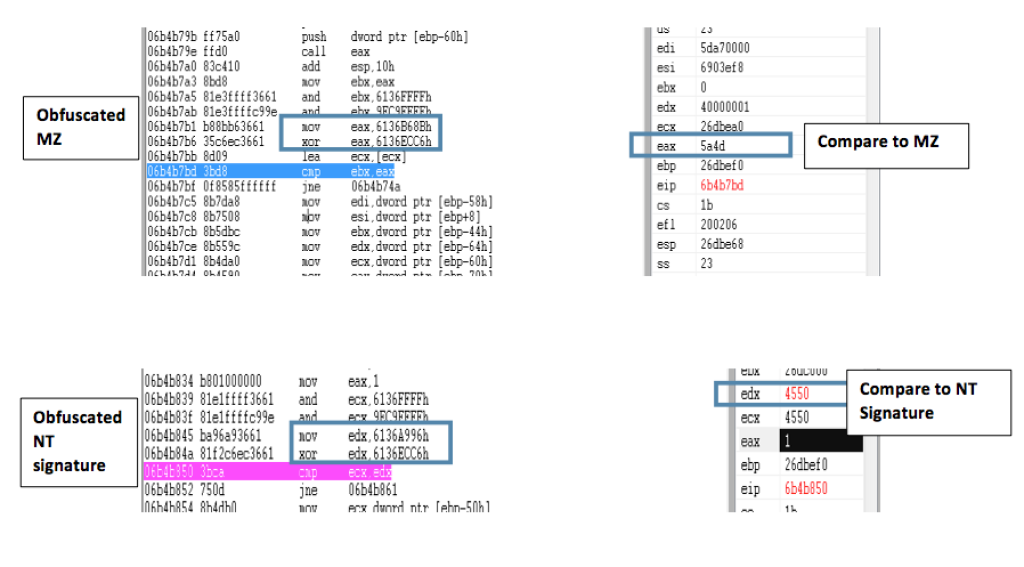

The size of one array (index [401]) is changed from 0x3fe to 0x40000001 so that it spans the entire address space. The first doubleword in this array points to a read-only section inside the Flash module. The attacker uses this address as the starting point of an MZ search (the "MZ" signature marks the start of the library image): the leaked read-only pointer is first aligned to a 64K boundary, and each search iteration then steps 64K.
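To make the length corruption and the 64K-aligned MZ search concrete, here is a minimal Python sketch that runs against a simulated address space (a `bytearray`), not a real process. The `SimVector` class, the helper names, the memory layout, and the assumption that the scan walks downward from the leaked pointer (a section pointer sits above its module's base) are all hypothetical; the analysis above does not show the exploit's actual code.

```python
import struct
from typing import Optional

ALIGN_64K = 0x10000   # assumed step: Windows maps DLL images on 64 KB boundaries
MZ = 0x5A4D           # b"MZ" read as a little-endian WORD

class SimVector:
    """Hypothetical toy uint vector whose only bounds check is its length field.
    Element i lives at address data_addr + 4*i in a simulated 32-bit space."""

    def __init__(self, memory: bytearray, data_addr: int, length: int):
        self.memory = memory
        self.data_addr = data_addr
        self.length = length

    def get(self, index: int) -> int:
        if not 0 <= index < self.length:
            raise IndexError("index out of range")
        addr = (self.data_addr + 4 * index) % 2**32  # 32-bit wraparound
        return struct.unpack_from("<I", self.memory, addr)[0]

def read_dword(vec: SimVector, addr: int) -> int:
    """Arbitrary read: convert an absolute 4-byte-aligned address into an index."""
    return vec.get(((addr - vec.data_addr) % 2**32) // 4)

def find_module_base(vec: SimVector, leaked_ptr: int) -> Optional[int]:
    """Align the leaked pointer down to 64 KB, then step back until 'MZ' is found."""
    addr = leaked_ptr & ~(ALIGN_64K - 1)
    while addr >= 0:
        if (read_dword(vec, addr) & 0xFFFF) == MZ:
            return addr
        addr -= ALIGN_64K
    return None

# Hypothetical layout: module image at 0x30000, sprayed arrays at 0x60000.
memory = bytearray(0x80000)
memory[0x30000:0x30002] = b"MZ"
vec = SimVector(memory, data_addr=0x60000, length=0x3FE)

# With the original length, reading below the array's data is rejected...
try:
    read_dword(vec, 0x30000)
except IndexError:
    print("blocked by bounds check")

# ...but with 0x40000001 four-byte elements every 32-bit address maps to a valid index.
vec.length = 0x40000001
print(hex(find_module_base(vec, leaked_ptr=0x51234)))  # 0x30000
```

A stray "MZ" at some unrelated 64K boundary would also match this check, which is why the next step validates the NT signature before trusting the candidate base.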

After finding the MZ, the exploit validates the module's NT signature, reads the code base pointer and size, and from that point searches for gadgets in the code of the Flash module.
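The validation step can be sketched the same way. This hedged Python sketch reads the standard PE layout from a simulated buffer: `e_lfanew` at offset 0x3C, the `"PE\0\0"` NT signature, and `SizeOfCode`/`BaseOfCode` at offsets 4 and 20 of the optional header (the same offsets in PE32 and PE32+). The function name `code_range` and the fake header values are illustrative assumptions, not the exploit's code. The returned range is where a gadget search would then run.

```python
import struct
from typing import Optional, Tuple

IMAGE_DOS_SIGNATURE = 0x5A4D     # "MZ"
IMAGE_NT_SIGNATURE = 0x00004550  # "PE\0\0"

def code_range(mem: bytes, base: int) -> Optional[Tuple[int, int]]:
    """Validate the DOS and NT signatures of a candidate image at `base` and
    return (absolute start of code, size of code), or None if it is not a PE."""
    if base + 0x40 > len(mem):
        return None
    if struct.unpack_from("<H", mem, base)[0] != IMAGE_DOS_SIGNATURE:
        return None
    e_lfanew = struct.unpack_from("<I", mem, base + 0x3C)[0]
    nt = base + e_lfanew
    if nt + 48 > len(mem):
        return None
    if struct.unpack_from("<I", mem, nt)[0] != IMAGE_NT_SIGNATURE:
        return None
    # The optional header follows the 4-byte signature and the 20-byte COFF header.
    opt = nt + 4 + 20
    size_of_code = struct.unpack_from("<I", mem, opt + 4)[0]
    base_of_code = struct.unpack_from("<I", mem, opt + 20)[0]  # an RVA
    return base + base_of_code, size_of_code

# Build a tiny fake PE header in a simulated 64 KB region (values are made up).
img = bytearray(0x10000)
img[0:2] = b"MZ"
struct.pack_into("<I", img, 0x3C, 0x80)              # e_lfanew
img[0x80:0x84] = b"PE\0\0"
struct.pack_into("<I", img, 0x80 + 24 + 4, 0x2000)   # SizeOfCode
struct.pack_into("<I", img, 0x80 + 24 + 20, 0x1000)  # BaseOfCode (RVA)
print(code_range(img, 0))  # (4096, 8192)
```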

Morphisec's technology not only stopped the attack at the first step of exploitation, it also identified the targeted vulnerability and the method of exploitation as part of its amazing real-time forensic capability. All of this was done instantly, in memory, at the binary level, without any decompilation!

I imagine that pretty soon other security products will add this sample's signature to their databases so it can be properly detected. Nevertheless, each new mutation of the same attack still leaves the common security arsenal "blind" to it, which is not very efficient. Gladly, Morphisec is changing this reality! I know that when a startup is still in stealth mode and there is no public information about such comparisons, it's a bit "unfair" to the other technologies on the market, but still, I just had to mention it :)

I've enjoyed reading your article and it seems interesting, but some facts bewilder me.

From what I understood, Morphisec prevents the attack by identifying the search for the Flash module address. This raises two main problems:

1. Scanning the entire memory range looking for a specific value is a legal operation.

Many applications do it, and most of them are not malicious. So would Morphisec identify them as malicious? Bottom line: there is no way to distinguish between valid memory scans and malicious ones; in the end, both are just blocks of assembly code.

2. Traditionally, memory scans are done by searching the entire memory range, which is easy to detect.

But what if attackers adjusted their tactics and performed smarter scans?

In the end, there are infinitely many ways to search memory for a module.

For example, using the attack described above, one might corrupt multiple array objects and read a single DWORD from each array.

Instead of searching for MZ across the entire memory range, one would search for a specific DWORD value known to be located at a certain offset in the module.

Moreover, DLLs are normally loaded within a known range of addresses and are page-aligned, so the number of DWORDs that need to be read shrinks further.
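The "smarter scan" described in this comment can be sketched as follows: instead of walking memory looking for MZ, probe one DWORD at a fixed offset at each aligned candidate base inside an expected load range. The function name `sparse_probe`, the address range, the offset 0x1234, and the marker value 0xDEADBEEF are all made up for illustration; a real attacker would need a value that is actually stable at that offset in the targeted module build. The 64 KB step is an assumption based on Windows' allocation granularity.

```python
import struct
from typing import Optional

def sparse_probe(mem: bytes, lo: int, hi: int, step: int,
                 offset: int, expected: int) -> Optional[int]:
    """Probe one DWORD per aligned candidate base in [lo, hi).

    Returns the first base whose DWORD at base + offset equals `expected`,
    or None. Cost: one read per candidate instead of a byte-by-byte walk."""
    for base in range(lo, hi, step):
        addr = base + offset
        if addr + 4 > len(mem):
            break  # past the end of the simulated address space
        if struct.unpack_from("<I", mem, addr)[0] == expected:
            return base
    return None

# Hypothetical setup: the module is loaded somewhere in [0x40000, 0x100000)
# and the attacker "knows" that offset 0x1234 in it holds 0xDEADBEEF.
memory = bytearray(0x100000)
struct.pack_into("<I", memory, 0x70000 + 0x1234, 0xDEADBEEF)

found = sparse_probe(memory, 0x40000, 0x100000, 0x10000, 0x1234, 0xDEADBEEF)
print(hex(found))  # 0x70000, after 4 reads (0x40000, 0x50000, 0x60000, 0x70000)
```

If images were only 4 KB page-aligned, as the comment puts it, the step would be 0x1000 and the number of probes over the same range would grow 16×, still far fewer reads than a full scan.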

Hi David,

Sorry for the late response; I did not get the notification. See my comments on your questions below:

>> I've enjoyed reading your article and it seems interesting, but some facts bewilder me.

>> From what I understood, Morphisec prevents the attack by identifying the search for the Flash module address. This raises two main problems:

>>>> Morphisec does not try to identify the search for the Flash module base address, or any search at all; it has no runtime component. Morphisec randomizes the memory layout so that the attacker's assumptions about it become wrong. The prevention is policy-based: the attacker is free to find the gadgets and resources, but we can prevent their execution. Moreover, Morphisec includes multiple layers of defense: attacks that manage to bypass the anti-exploitation techniques are stopped at the shellcode stage (still without any runtime component).

>> 1. Scanning the entire memory range looking for a specific value is a legal operation.

>> Many applications do it, and most of them are not malicious. So would Morphisec identify them as malicious? Bottom line: there is no way to distinguish between valid memory scans and malicious ones; in the end, both are just blocks of assembly code.

>>>> The search itself is a legal operation; the question is what you are looking for and where. In many cases the attacker relies on specific assumptions about concrete offsets or data locations, based only on his own test runs. A valid program can't base its assumptions on those specific fields, simply because they might change across OS versions, application versions, security mechanisms such as ASLR, or even patches that change the memory layout. If your valid program crashes after a small OS patch, then your program has a problem.

>> 2. Traditionally, memory scans are done by searching the entire memory range, which is easy to detect.

>> But what if attackers adjusted their tactics and performed smarter scans?

>> In the end, there are infinitely many ways to search memory for a module.

>> For example, using the attack described above, one might corrupt multiple array objects and read a single DWORD from each array.

>> Instead of searching for MZ across the entire memory range, one would search for a specific DWORD value known to be located at a certain offset in the module.

>>>> In my first answer, I explained that the MZ search is not something we are trying to block. We break the attacker's assumptions so that memory randomly looks different: whether you search DWORD by DWORD or jump 64K bytes at a time, you still end up going over unused memory.

If you would like more detailed information, please connect with me directly via LinkedIn and we can discuss further.

Best,

Dudu